Build Generative Voice AI apps with our ASR/Speech-to-Text and LLM-powered NLU APIs. Record & Transcribe meetings, contact center calls, videos, etc. Get LLM-powered Summary, Sentiment and more. Build Conversational Voice Assistants that integrate with your Contact Center platform. Get started with our developer-first platform today.

APIs for Developers • AI Assistant for Meetings • Conversational Voice Assistants for Call Centers

Voicegain’s deep learning ASR offers an unbeatable combination of accuracy, price and flexibility. Voicegain ASR can be deployed on-premise, in your VPC or invoked as a cloud service. We integrate out-of-the-box with leading contact center, video meeting and bot platforms.

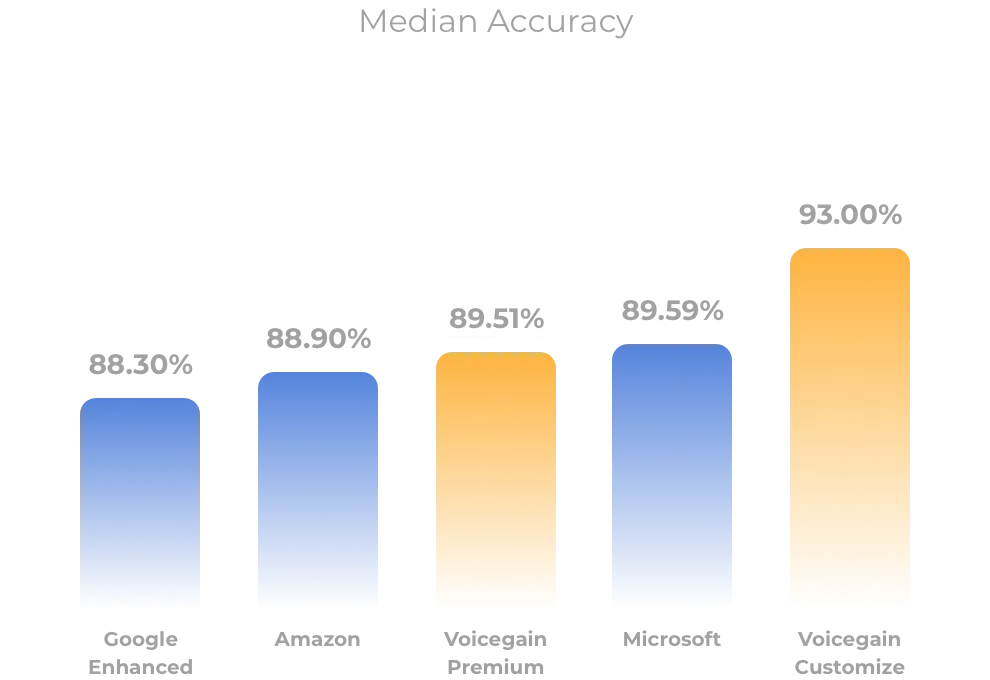

Voicegain’s out-of-the-box accuracy, for both batch and streaming speech recognition, is on par with the very best. But you can achieve accuracy in the high 90s when you train with your data.

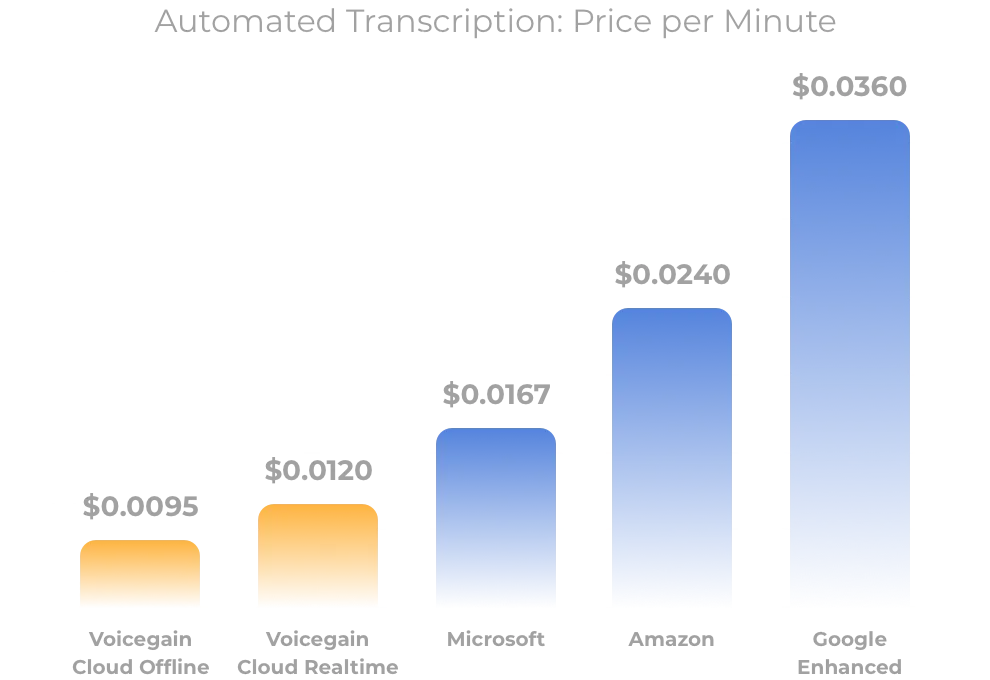

Voicegain is priced 50%-75% lower than the large Cloud Speech-to-Text players. Our Edge pricing is also very affordable compared to competing options.

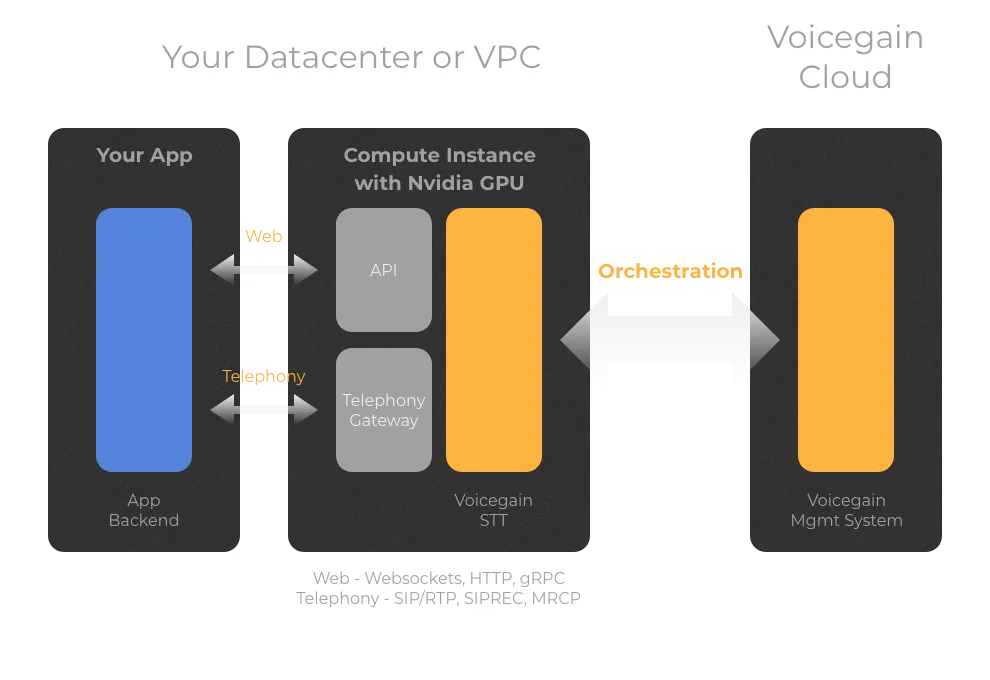

Access Voicegain on our multi-tenant Cloud. Or deploy it in your Datacenter or VPC. Use your existing audio infrastructure and integrate with a protocol of your choice.

Our ASR is built on the most recent advances in deep learning. We use end-to-end transformer-based deep neural networks, trained on several tens of thousands of hours of diverse audio datasets.

APIs to embed transcription into your app and build voice bots accessible over telephony. Deploy Voicegain on your infrastructure (VPC, Datacenter) or use our cloud service.

Get your own AI Meeting Assistant to automate note taking. Always know who said what when and where! Integrates with video meeting platforms like Zoom, Microsoft Teams and Google Meet. Edge (On-Prem or VPC) options available.

Automate Quality Assurance and extract CX insights from voice interactions in the contact center. White-label or Source Code License of UI available.

Voicegain, the leading Edge Voice AI platform for enterprises and Voice SaaS companies, is thrilled to announce the successful completion of a System and Organizational Control (SOC) 2 Type 1 Audit performed by Sensiba LLP.

LLMs like ChatGPT and Bard are taking the world by storm! An LLM like ChatGPT is really good at both understanding language and acquiring knowledge from the content it is given. The outcome is almost eerie: once these LLMs acquire knowledge, they can very accurately answer questions that in the past seemed to require human judgement.

One big use-case for LLMs is in the analysis of business meetings - both internal (between employees) and external (e.g., conversations with customers, vendors, etc.).

In the past few years, companies have primarily used multi-tenant Revenue/Sales Intelligence and Meeting AI SaaS offerings to transcribe business conversations and extract insights. With such multi-tenant offerings, transcription and natural language processing take place on the vendor's cloud. Once the transcript is generated, NLU models offered by the Meeting AI vendor are used to extract insights. For example, Revenue Intelligence products like Gong extract questions and sales blockers from sales conversations. Most Meeting AI assistants extract summaries and action items.

Essentially these NLU models, many of which predate LLMs, were able to summarize and to extract topics, keywords and phrases. Enterprises did not mind using the vendor's cloud infrastructure to store the transcripts, as what this NLU could do seemed pretty harmless.

However, LLMs take this to a whole different level. Our team used the OpenAI Embeddings API to generate embeddings of the transcripts of our daily meetings conducted over a one-month period. We stored these embeddings in an open-source vector database (our knowledge base). During testing, for each user question, we generated an embedding of the question and queried the vector database (i.e., the knowledge base) to retrieve related/similar embeddings.

Then we provided the related documents as context and the user question as a prompt to the GPT 3.5 API so that it could generate the answer. We got really good results.
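The retrieve-then-prompt flow described above can be sketched roughly as follows. This is a minimal illustration and not our actual implementation: the `embed` function is a toy bag-of-words stand-in for an embeddings API, `KnowledgeBase` is a hypothetical in-memory stand-in for a vector database, the sample transcript chunks are made-up placeholders, and the final call to the LLM is left out entirely.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embeddings API: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class KnowledgeBase:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str]] = []

    def add(self, chunk: str) -> None:
        # Store the embedding next to the transcript chunk it came from.
        self._items.append((embed(chunk), chunk))

    def query(self, question: str, top_k: int = 2) -> list[str]:
        # Embed the question and return the most similar stored chunks.
        q = embed(question)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [chunk for _, chunk in ranked[:top_k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks (context) and the user question into one prompt."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer the question using only the meeting excerpts below.\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up placeholder chunks, not real meeting content.
kb = KnowledgeBase()
kb.add("The team compared two managed databases for the new service.")
kb.add("Hiring update: two engineers accepted offers this week.")
kb.add("The cloud provider migration is scheduled for next quarter.")

question = "Which cloud provider is the team using?"
prompt = build_prompt(question, kb.query(question, top_k=1))
print(prompt)  # this string is what would be sent to the LLM
```

In a real setup, `embed` would call an embeddings service and `KnowledgeBase` would be backed by a vector database; only the overall shape (embed, store, retrieve by similarity, prompt with context) is meant to carry over.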

We were able to get answers to the following questions:

1. Provide a summary of the contract with <Largest Customer Name>.

2. What is the progress on <Key Initiative>?

3. Did the Company hire new employees?

4. Did the Company discuss any trade secrets?

5. What is the team's opinion on MongoDB Atlas vs Google Firestore?

6. What new products is the Company planning to develop?

7. Which Cloud provider is the Company using?

8. What is the progress on a key initiative?

9. Are employees happy working in the company?

10. Is the team fighting fires?

ChatGPT's responses to the above questions were amazingly and eerily accurate. For Question 4, it indicated that it did not want to answer the question. And when it did not have adequate information (e.g., Question 9), it said so in its response.

At Voicegain, we have always been big proponents of keeping Voice AI on the Edge. We have written about it in the past.

Meeting transcripts in any business are a veritable gold mine of information. With the power of LLMs, they can now be queried very easily to provide amazing insights. But if these transcripts are stored in another vendor's cloud, that can expose highly proprietary and confidential business information to third parties.

Hence for businesses it is extremely critical that such transcripts are stored only in private infrastructure (behind the firewall). It is really important for Enterprise IT to make sure this happens in order to safeguard proprietary and confidential information.

If you are looking for such a solution, we can help. At Voicegain, we offer Voicegain Transcribe, an enterprise-ready solution for Meeting AI. With Voicegain Transcribe, the entire solution can be deployed either in a datacenter (on bare-metal) or in a private cloud. You can read more about it here.

It has been another 6 months since we published our last speech recognition accuracy benchmark. Back then, the results were as follows (from most accurate to the least): Microsoft, then Amazon closely followed by Voicegain, then new Google latest_long and Google Enhanced last.

While the order has remained the same as in the last benchmark, three companies - Amazon, Voicegain and Microsoft - showed significant improvement.

Since the last benchmark, we at Voicegain have invested in more training, mainly on lectures conducted over Zoom and in a live setting. Training on this type of data resulted in a further increase in the accuracy of our model. We are currently in the middle of a further round of training with a focus on call center conversations.

As far as the other recognizers are concerned:

We have repeated the test using a methodology similar to before: we used 44 files from the Jason Kincaid data set and 20 files published by rev.ai, and removed all files where none of the recognizers could achieve a Word Error Rate (WER) lower than 25%.

This time again only one file was that difficult. It was a bad quality phone interview (Byron Smith Interview 111416 - YouTube) with a WER of 25.48%.
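For readers who want to reproduce the filtering step, here is a minimal sketch of how WER and the 25% cut-off could be computed. It assumes the standard definition of WER (word-level edit distance divided by the number of reference words) and only lowercases and splits on whitespace; the text normalization used in the actual benchmark is not described here. The function names, recognizer names and numbers in the example are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,         # insertion: extra word in hypothesis
                prev[j - 1] + (r != h),  # substitution (cost 1) or match (cost 0)
            ))
        prev = curr
    return prev[-1] / len(ref)


def drop_too_difficult(wer_by_file: dict, threshold: float = 0.25) -> dict:
    """Keep only files where at least one recognizer achieved a WER below the threshold."""
    return {
        name: scores
        for name, scores in wer_by_file.items()
        if min(scores.values()) < threshold
    }


# Illustrative numbers only, not benchmark results.
scores = {
    "clean_podcast.wav": {"recognizer_a": 0.08, "recognizer_b": 0.11},
    "noisy_phone_call.wav": {"recognizer_a": 0.31, "recognizer_b": 0.27},
}
kept = drop_too_difficult(scores)
print(sorted(kept))
```

With these made-up scores, only the first file survives the filter, because no recognizer gets below 25% WER on the second one.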

We publish this because we want any third party - any ASR vendor, developer or analyst - to be able to reproduce these results.

You can see box-plots with the results above. The chart also reports the average and median Word Error Rate (WER).

Only 3 recognizers have improved in the last 6 months.

Detailed data from this benchmark indicates that Amazon is better than Voicegain on audio files with WER below the median and worse on audio files with WER above the median. Otherwise, AWS and Voicegain are very closely matched. However, we have also run a client-specific benchmark where it was the other way around: Amazon was slightly better than Voicegain on audio files with WER above the median, but Voicegain was better on audio files with WER below the median. Net-net, it really depends on the type of audio files, but overall our results indicate that Voicegain is very close to AWS.

Let's look at the number of files on which each recognizer was the best one.

We have now run the same benchmark 5 times, so we can draw charts showing how each of the recognizers has improved over the last 2 years and 3 months. (Note that for Google the latest 2 results are from the latest_long model; the other Google results are from video enhanced.)

You can clearly see that Voicegain and Amazon started quite a bit behind Google and Microsoft but have since caught up.

Google seems to have the longest development cycles, with very little improvement from Sept. 2021 until about half a year ago. Microsoft, on the other hand, releases an improved recognizer every 6 months. Our improved releases are even more frequent than that.

As you can see, the field is very close and you get different results on different files (the average and median do not paint the whole picture). As always, we invite you to review our apps, sign up and test our accuracy with your data.

When you have to select speech recognition/ASR software, there are other factors beyond out-of-the-box recognition accuracy. These factors are, for example:

1. Click here for instructions to access our live demo site.

2. If you are building a cool voice app and you are looking to test our APIs, click here to sign up for a developer account and receive $50 in free credits.

3. If you want to take Voicegain as your own AI Transcription Assistant to meetings, click here.

Interested in customizing the ASR or deploying Voicegain on your infrastructure?